A Step-by-Step Guide to Slashing Your AWS Bill by 50%

As businesses increasingly move their workloads to the cloud, managing cloud costs has become a critical concern. At Tidal.Cloud, with the roll-out of new features such as Tidal Accelerator’s Business Analytics, we recently realized that our AWS costs were steadily increasing, and we needed to take action to optimize our cloud spending.

In this blog post, we’ll share the step-by-step process we followed to identify cost-saving opportunities and implement strategies to reduce our AWS bill by a whopping 50%. Whether you’re just getting started with cloud cost optimization or looking to take your efforts to the next level, this guide will provide you with actionable insights and practical tips.

Step 1: Enable the AWS Cost Optimization Hub

The first step in our cloud cost optimization journey was to enable the AWS Cost Optimization Hub. This powerful feature provides detailed insights into your AWS spending and offers recommendations for cost savings.

To get started, enable the Cost Optimization Hub on the AWS Billing and Cost Management Console. Once enabled, select a specific period for analysis, and AWS will start analyzing your resource usage and providing tailored recommendations based on your specific workloads.

We focused on a review period of the past three months to ensure the recommendations were based on our most recent usage trends. Read more on how to get started here.

Step 2: Know What You Have

We like to drink our own champagne here at Tidal, so the next step for us was to audit our cloud inventory against the business applications that we track in Tidal Accelerator. We retired apps that were no longer adding value and spun down their associated resources. We mostly use Terraform for infrastructure as code, so a simple “terraform destroy” command cleans up old application environments very quickly.

With the cleanup and rationalization complete, Step 3 is to identify and implement quick wins on the remaining estate.

Step 3: Identify and Implement Quick Wins

With the Cost Optimization Hub enabled and our inventory rationalized, we were able to quickly identify some low-hanging fruit for cost savings on the resources we needed to keep.

For us, purchasing Compute Savings Plans was a straightforward decision that led to an estimated 25% reduction in costs. These plans offer discounted rates on EC2, Fargate, and Lambda usage in exchange for a one- or three-year commitment to a fixed hourly spend, and we could implement them swiftly for immediate savings on our consistent workloads. By completing the cleanup and spin-down process first, we avoided committing to savings plans for resources that we didn’t need.
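To see why cleaning up first matters, here is a minimal sketch of how a commitment interacts with hourly usage. The hourly spend figures and the 25% discount are assumptions for illustration, not our actual numbers, and real discounts vary by instance family, region, and term.

```python
def hourly_cost(on_demand_usage, commitment, discount):
    """Cost of one hour under a Savings Plan style commitment.

    on_demand_usage: what the hour would cost at on-demand rates (USD)
    commitment:      committed spend per hour, paid even if unused (USD)
    discount:        assumed fractional discount, e.g. 0.25
    """
    covered = commitment / (1 - discount)           # on-demand value the commitment absorbs
    overflow = max(0.0, on_demand_usage - covered)  # anything beyond it is billed on-demand
    return commitment + overflow


def total_cost(usage_by_hour, commitment, discount):
    return sum(hourly_cost(u, commitment, discount) for u in usage_by_hour)


# Hypothetical hourly on-demand compute spend (USD).
usage = [10.0, 12.0, 8.0, 15.0, 8.0, 9.0]
discount = 0.25  # assumed, for illustration only

no_plan = total_cost(usage, 0.0, discount)
floor_commitment = min(usage) * (1 - discount)   # commit only to the always-on floor
with_plan = total_cost(usage, floor_commitment, discount)
over_committed = total_cost(usage, 12.0, discount)

print(f"no plan: {no_plan:.2f}, floor commitment: {with_plan:.2f}, "
      f"over-committed: {over_committed:.2f}")
```

Committing to the floor lowers the bill, while a commitment sized for resources that later disappear can cost more than having no plan at all.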

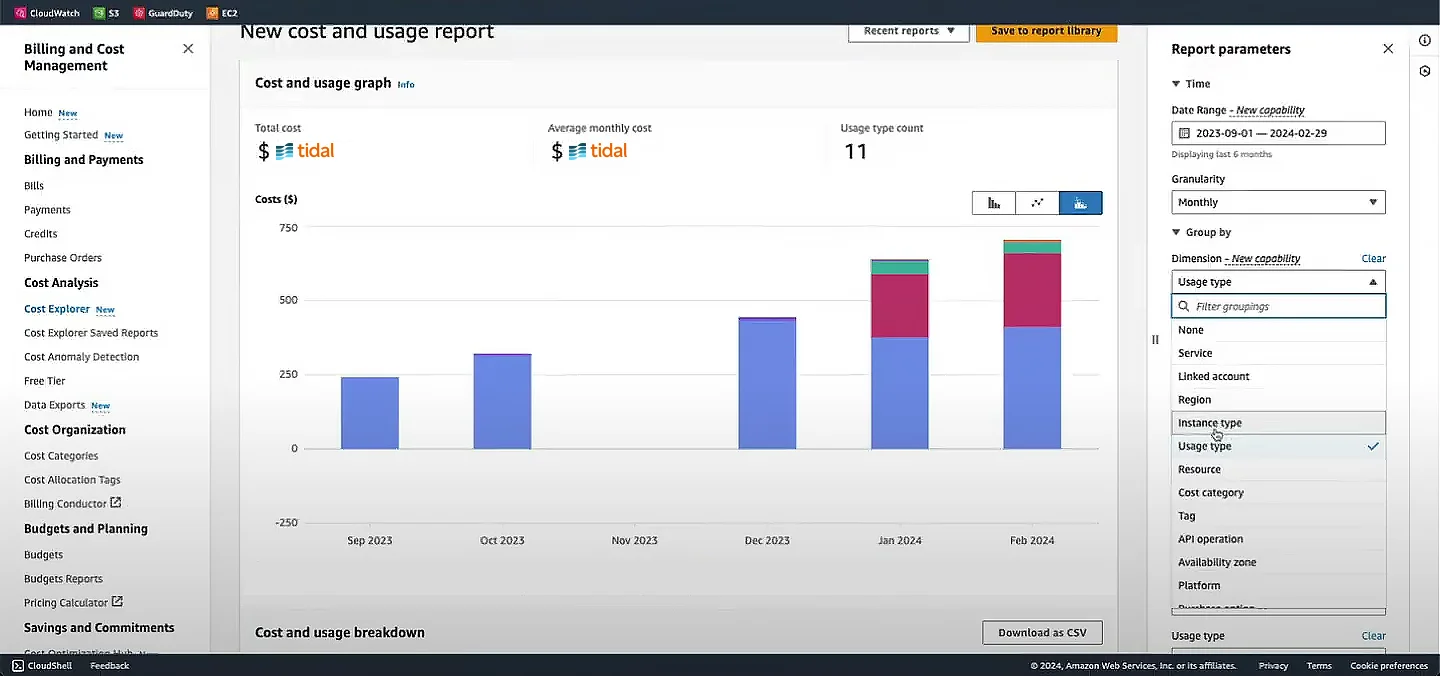

AWS Cost Explorer

Step 4: Develop a Long-Term Cost Optimization Strategy

While the quick wins were a great starting point, we knew that achieving significant and sustainable cost savings would require a more comprehensive strategy. We identified several long-term initiatives, including:

Migrating to ARM64 Graviton Instances

AWS offers ARM-based Graviton instances that provide excellent performance at a lower cost compared to traditional x86 instances. We identified workloads that could be migrated to Graviton instances, potentially saving us a substantial amount on compute costs without sacrificing performance.

Using Reserved Instances for RDS

Similar to Compute Savings Plans for EC2, AWS offers Reserved Instances for RDS databases, which can provide significant discounts for committed usage of database services compared to on-demand pricing.
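A quick way to judge whether a reservation is worthwhile is to compare how much of the term the database will actually run against the discount on offer. The sketch below assumes a single flat discount figure, which is a simplification; actual Reserved Instance pricing depends on term, payment option, engine, and region.

```python
def break_even_utilization(discount):
    """Fraction of the term an instance must run for a reservation to pay off.

    A reservation is billed for every hour of the term at (1 - discount) of the
    on-demand rate, so it breaks even when on-demand usage would cost the same.
    """
    return 1.0 - discount


def cheaper_option(utilization, discount):
    """Return which pricing model costs less for the given utilization (0..1)."""
    if utilization > break_even_utilization(discount):
        return "reserved"
    return "on-demand"


# Hypothetical: a 30% discount and a database that runs 90% of the time.
print(cheaper_option(0.9, 0.30))
# A dev database that only runs half the time is better left on-demand.
print(cheaper_option(0.5, 0.30))
```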

Shifting Database Workloads to Burstable Instances

After analyzing our database workloads, we realized that many of them were memory-bound and could potentially run on burstable instances, which are more cost-effective for workloads with intermittent CPU usage.
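Burstable instances earn CPU credits while running below a baseline and spend them when bursting, where one credit is one vCPU at 100% for one minute. The sketch below simulates a credit balance for a candidate workload. The 2 vCPU and 20% baseline figures are assumptions modeled on a medium-sized T3-class instance, and the utilization series is made up; check the AWS documentation for the instance class you are considering.

```python
def simulate_credits(cpu_utilization_by_hour, vcpus, baseline,
                     start_balance=0.0, max_balance=None):
    """Return the CPU credit balance at the end of each hour.

    cpu_utilization_by_hour: average utilization per hour as a fraction (0..1)
    vcpus:                   number of vCPUs on the instance
    baseline:                baseline utilization per vCPU as a fraction
    """
    earned_per_hour = vcpus * baseline * 60
    balance = start_balance
    history = []
    for utilization in cpu_utilization_by_hour:
        spent = vcpus * utilization * 60
        balance += earned_per_hour - spent
        if max_balance is not None:
            balance = min(balance, max_balance)
        # A balance of zero means the instance is throttled to baseline
        # (or accrues surplus charges in unlimited mode).
        balance = max(balance, 0.0)
        history.append(balance)
    return history


# Hypothetical workload: four quiet hours followed by a two-hour burst.
utilization = [0.05, 0.05, 0.05, 0.05, 0.60, 0.60]
balances = simulate_credits(utilization, vcpus=2, baseline=0.20)
print([round(b, 1) for b in balances])
```

If the balance regularly hits zero during normal operation, the workload is probably not a good fit for a burstable class.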

Introducing Database Caching Layer

A caching layer using Amazon ElastiCache allowed us to reduce the number of read-replica database nodes we run. This reduced our compute and licensing costs while improving data-read performance by 80x.

Optimizing Storage with gp3 EBS Volumes

gp3 EBS volumes let you provision IOPS and throughput independently of volume size, which makes them more flexible and cost-effective than the older gp2 type. We identified opportunities to migrate our existing EBS volumes to gp3 for better performance and cost savings.
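A rough per-volume comparison can help prioritize which volumes to migrate first. In the sketch below, every price is an assumption for illustration; look up current rates for your region before relying on the result. The included gp3 baseline of 3,000 IOPS and 125 MiB/s follows AWS's published gp3 characteristics.

```python
# Illustrative prices in USD per month; assumptions, not quoted rates.
GP2_PER_GIB = 0.10
GP3_PER_GIB = 0.08
GP3_PER_EXTRA_IOPS = 0.005
GP3_PER_EXTRA_MIBPS = 0.04

# gp3 includes this much performance regardless of volume size.
GP3_INCLUDED_IOPS = 3000
GP3_INCLUDED_MIBPS = 125


def gp2_monthly(size_gib):
    return size_gib * GP2_PER_GIB


def gp3_monthly(size_gib, iops=GP3_INCLUDED_IOPS, throughput_mibps=GP3_INCLUDED_MIBPS):
    extra_iops = max(0, iops - GP3_INCLUDED_IOPS)
    extra_throughput = max(0, throughput_mibps - GP3_INCLUDED_MIBPS)
    return (size_gib * GP3_PER_GIB
            + extra_iops * GP3_PER_EXTRA_IOPS
            + extra_throughput * GP3_PER_EXTRA_MIBPS)


size = 500  # GiB, hypothetical volume
print(f"gp2: {gp2_monthly(size):.2f}")
print(f"gp3 at baseline: {gp3_monthly(size):.2f}")
print(f"gp3 with 6000 IOPS: {gp3_monthly(size, iops=6000):.2f}")
```

Under these assumed prices, gp3 is cheaper at baseline performance, but provisioning a lot of extra IOPS can erase the saving, so it is worth checking what each volume actually uses.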

Right-sizing Compute Resources

We identified instances that were over-provisioned or under-utilized based on our workload demands, allowing us to right-size these resources and optimize costs.
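As a simple illustration of the idea, the sketch below flags instances whose CPU utilization stays low on both average and peak. The thresholds, instance names, and sample values are all hypothetical, and a real review would also look at memory, network, and disk metrics.

```python
from statistics import mean


def rightsizing_candidates(cpu_samples_by_instance,
                           avg_threshold=20.0, peak_threshold=40.0):
    """Return instance names whose CPU usage suggests a smaller size would do.

    cpu_samples_by_instance: dict of name -> list of CPU utilization percentages
    """
    candidates = []
    for name, samples in cpu_samples_by_instance.items():
        if not samples:
            continue  # no data, so no recommendation
        if mean(samples) < avg_threshold and max(samples) < peak_threshold:
            candidates.append(name)
    return sorted(candidates)


# Hypothetical utilization samples (percent).
samples = {
    "app-server-1": [5, 8, 12, 10],     # consistently idle
    "app-server-2": [55, 70, 62, 80],   # busy
    "batch-worker-1": [3, 4, 95, 5],    # idle on average but with a real peak
}
print(rightsizing_candidates(samples))
```

Checking the peak as well as the average keeps spiky workloads, like the batch worker here, from being downsized by mistake.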

You can also take advantage of the AWS Trusted Advisor console for recommendations on compute optimization. Read more on how to get started here.

Leveraging Private Subnets and NAT Instances

We discovered that public IPv4 addresses and NAT Gateways accounted for a significant share of our bill. By moving workloads into private subnets and running our own NAT instances, especially on ARM64, we could drastically reduce these expenses while maintaining the necessary network connectivity.
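The gap becomes clear with some back-of-the-envelope arithmetic. All rates below are assumptions for illustration; check current pricing for your region. The comparison also leaves out standard data transfer charges, which apply in both cases.

```python
HOURS_PER_MONTH = 730


def nat_gateway_monthly(gb_processed, hourly_rate=0.045, per_gb_rate=0.045):
    """Managed NAT Gateway: an hourly charge plus a per-GB processing charge."""
    return hourly_rate * HOURS_PER_MONTH + per_gb_rate * gb_processed


def nat_instance_monthly(hourly_rate=0.0042):
    """Self-managed NAT instance: only the instance's hourly charge.

    The default rate is an assumed price for a very small ARM64 instance.
    """
    return hourly_rate * HOURS_PER_MONTH


traffic_gb = 1000  # hypothetical monthly traffic through the NAT
gateway = nat_gateway_monthly(traffic_gb)
instance = nat_instance_monthly()
print(f"NAT Gateway: {gateway:.2f} USD, NAT instance: {instance:.2f} USD")
```

The trade-off is that a NAT instance is yours to patch, monitor, and make highly available, so the saving should be weighed against that operational work.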

Step 5: Implement Continuous Monitoring and Automation

To ensure ongoing cost optimization and prevent cost creep, we implemented several monitoring and automation measures:

Billing Alerts

We set up billing alerts to notify us when our spending exceeds predefined thresholds, allowing us to take corrective action promptly. However, be mindful that while you will be notified if you exceed a threshold, a repeat alert will not happen even if no action is taken, unless you set up a series of alerts at various thresholds.
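The series-of-thresholds approach can be sketched as follows. The budget figure and the percentage steps are hypothetical; the point is that each threshold fires once as spend passes it, so several steps are needed to keep being reminded.

```python
def alert_thresholds(monthly_budget, percentages=(50, 80, 100, 120)):
    """Turn a budget into a ladder of alert thresholds in USD."""
    return [round(monthly_budget * p / 100, 2) for p in percentages]


def newly_crossed(thresholds, previous_spend, current_spend):
    """Thresholds that were crossed since the last check and should alert now."""
    return [t for t in thresholds if previous_spend < t <= current_spend]


thresholds = alert_thresholds(1000)  # hypothetical 1,000 USD monthly budget

# Spend jumps from 450 to 820: both the 50% and 80% alerts fire.
print(newly_crossed(thresholds, 450, 820))
# Spend creeps from 820 to 900: nothing fires until the next rung is reached.
print(newly_crossed(thresholds, 820, 900))
```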

Cost Anomaly Detection

One way to get around missing billing alerts is to use AWS Cost Anomaly Detection, which learns from your usage patterns and alerts you to abnormal spending soon after it is detected. This can help to catch potential cost issues before they become significant.
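To build intuition for what an anomaly detector does, here is a deliberately simple stand-in that flags a day whose cost sits far above the recent average. This is not how the AWS service works internally, since it uses its own machine learning models; the z-score rule, the threshold, and the daily cost figures are all assumptions for illustration.

```python
from statistics import mean, stdev


def is_anomalous(history, today, z_threshold=3.0):
    """Flag today's cost if it is unusually high compared with recent days.

    history: list of recent daily costs (USD)
    today:   today's cost (USD)
    """
    if len(history) < 2:
        return False  # not enough data to judge
    average = mean(history)
    spread = stdev(history)
    if spread == 0:
        return today > average
    return (today - average) / spread > z_threshold


# Hypothetical daily costs for the past week.
recent = [100, 102, 98, 101, 99, 100, 103]
print(is_anomalous(recent, 140))  # a sudden spike
print(is_anomalous(recent, 102))  # within the normal range
```

Unlike a fixed billing threshold, a rule like this adapts to the usual level of spend, which is the property that makes anomaly detection a useful complement to alerts.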

Resource Tagging

We established a consistent tagging strategy to associate resources with relevant metadata (application, environment, owner). This not only improves visibility into our cost structure but also enables us to generate cost reports per application or team.
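A tagging strategy pays off when it is checked and used for reporting. The sketch below works on a plain list of resource records; the record shape, resource names, and costs are hypothetical, while the required tag keys are the ones listed above.

```python
from collections import defaultdict

REQUIRED_TAGS = ("application", "environment", "owner")


def missing_tags(resource):
    """Return the required tag keys that a resource lacks or leaves empty."""
    tags = resource.get("tags", {})
    return [key for key in REQUIRED_TAGS if not tags.get(key)]


def cost_by_tag(resources, tag_key):
    """Sum monthly cost per value of the given tag; untagged cost is kept visible."""
    totals = defaultdict(float)
    for resource in resources:
        value = resource.get("tags", {}).get(tag_key, "untagged")
        totals[value] += resource["monthly_cost"]
    return dict(totals)


# Hypothetical inventory.
resources = [
    {"id": "db-1", "monthly_cost": 120.0,
     "tags": {"application": "billing", "environment": "prod", "owner": "team-a"}},
    {"id": "web-1", "monthly_cost": 30.0,
     "tags": {"application": "billing", "environment": "dev"}},
    {"id": "tmp-1", "monthly_cost": 12.5, "tags": {}},
]

for resource in resources:
    gaps = missing_tags(resource)
    if gaps:
        print(f"{resource['id']} is missing tags: {', '.join(gaps)}")

print(cost_by_tag(resources, "application"))
```

Reporting untagged spend as its own line makes gaps in the tagging strategy hard to ignore.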

Schedulers and Auto-Scaling

For non-production environments and workloads with variable demand, we implemented schedulers and auto-scaling to automatically spin up or down resources based on actual usage, avoiding unnecessary costs.
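The core of a scheduler for non-production environments is a small decision about whether resources should be up right now. The sketch below assumes a weekday working window of 08:00 to 18:00, which is a hypothetical choice; the actual start and stop actions would be wired to whatever tooling you use.

```python
from datetime import datetime


def should_be_running(now, start_hour=8, stop_hour=18):
    """Decide whether a non-production environment should be up at the given time.

    Runs only on weekdays (Monday to Friday) between start_hour and stop_hour.
    """
    is_weekday = now.weekday() < 5  # Monday is 0, Sunday is 6
    in_working_hours = start_hour <= now.hour < stop_hour
    return is_weekday and in_working_hours


print(should_be_running(datetime(2024, 1, 8, 10, 0)))   # Monday morning
print(should_be_running(datetime(2024, 1, 8, 22, 0)))   # Monday night
print(should_be_running(datetime(2024, 1, 13, 10, 0)))  # Saturday morning
```

With this window an environment runs 50 of the 168 hours in a week, so it is switched off roughly 70% of the time.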

Bonus Step: Create a Cost-Conscious Culture

Some other ways you can encourage coordinated effort on cloud cost optimization in your organization:

- Conduct regular cost review meetings to analyze cloud spending, identify new optimization opportunities, and share best practices across teams. We make use of the AWS Cost Explorer to analyze, visualize, and forecast spending.

- Provide training and resources to help teams understand the importance of cost-conscious decision-making, and how to act on identified opportunities.

- Empower teams to take an active role in optimizing cloud costs.

For more, check out our FinOps series.

Lessons Learned

By following these steps, we were able to reduce our AWS costs by a staggering 50%, with the potential to achieve even greater savings in the future. Cloud cost optimization is not a one-time exercise but a continuous journey that requires diligence, collaboration, and a commitment to constantly reevaluating and improving our cloud architecture. We hope that by sharing our experience, we can inspire and guide other organizations on their own cloud cost optimization journeys. Remember, every dollar saved on cloud costs is a dollar that can be reinvested into driving innovation and growth for your business.

About Tidal Experts

This guide is based on the experiences of our team and the strategies it implemented, led by our Tidal Experts. Our goal is to help other AWS customers navigate the complex landscape of cloud costs and make informed decisions to optimize their cloud infrastructure.

Tidal Experts

Tidal provides a unique cloud enablement service to customers that reduces risk and accelerates momentum to cloud.
